Changing what's possible

“When a distinguished but elderly scientist states that something is possible, he is almost certainly right. When he states that something is impossible, he is very probably wrong.” Arthur C. Clarke

There’s not much I remember from high school physics classes, but one thing I still recall vividly is our teacher’s proclamation that nuclear decay is immutable. And when we look around, that assertion seems to make sense: nuclear waste wouldn’t be such a big deal if we had a technique to accelerate nuclear decay. Apply it to radioactive waste and -- swoosh -- the waste would be harmless.

But while changing nuclear decay is commonly deemed impossible, there are many other things in physics that are considered possible even if not yet realized. Consider, for instance, the declared target of the Battery 500 Consortium to double the energy density of batteries (the energy stored per unit weight) from current levels. In contrast to the aspiration of accelerating nuclear decay, such a target is comparatively uncontroversial. Who -- or what -- then determines what is a legitimate research target and what is not?

As we’ll see, what is considered possible is always very much a function of the specific knowledge domain associated with a particular problem. And a look at the history of science reveals that these notions change over time. Take the case of nuclear fission: breaking up atomic nuclei into similar-sized pieces was once believed to be impossible but later became accepted knowledge. In fact, the female physicist Ida Noddack was ridiculed for making this suggestion in 1934, only to be proven right by 1938.

Today I’m a researcher at MIT, and I co-lead a research project funded by the US Department of Energy’s innovation agency ARPA-E. In this project we seek not only to modify nuclear decay but also to achieve much greater control over nuclear reactions more generally. This includes accelerating fusion reactions to such an extent that they can occur at room temperature -- an aspiration that has long been considered impossible. But ARPA-E’s very own slogan is “changing what’s possible,” and this article lays out some critical aspects of what this entails.

#################################

To find out how notions of possible and impossible come about we need to have a closer look at how science is organized. The philosopher of science Thomas Kuhn emphasized how scientists tend to form tribal-like communities, in which certain commonly shared models, methods and beliefs become established and passed from one generation of scientists to another.

An example is the common practice in physics of treating objects as point masses, as if all their mass is concentrated at a single point (the center of mass). This simplification makes the math more tractable, especially in problems related to gravity and motion. Kuhn discusses this as an example of the kind of simplifying assumptions that become standard practice within a particular scientific community. These kinds of assumptions can be so deeply embedded that they're often not even recognized as such.

Kuhn called such a set of shared models, methods and beliefs a paradigm; in the words of the economist Brian Arthur, it is a domain. We can think of it as a certain limited composition of knowledge: the body of knowledge into which young researchers are inducted in a typical training program.

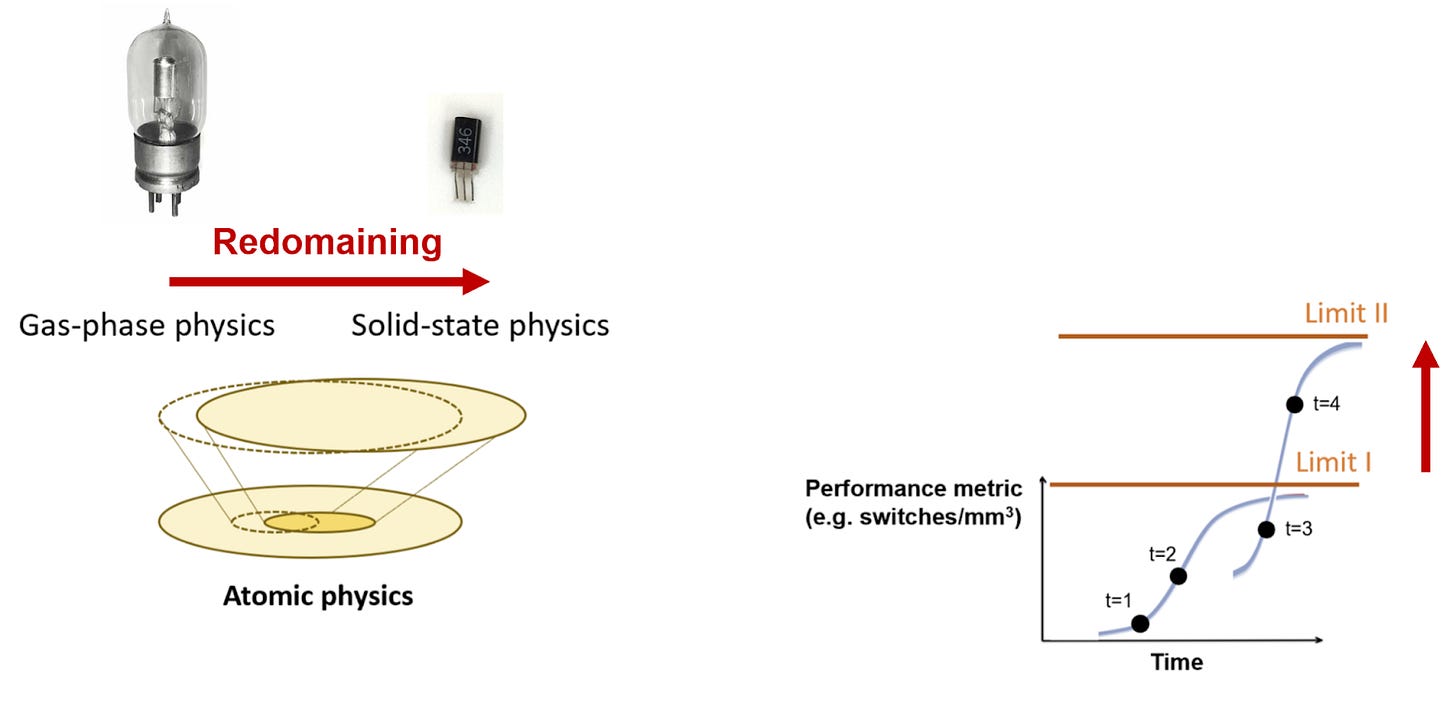

But domains can change over time -- what Arthur called redomaining: the composition of knowledge in a domain gets shifted or replaced. This can be seen nicely in the case of the transistor, perhaps the most impactful invention of the 20th century. If you have a phone in your hand or in your pocket, then you have billions of transistors on you. At its core, a transistor is an electric switch. In the early 20th century, building electric switches so small that many of them would fit into one’s pocket was considered impossible. At that time, small vacuum tubes -- akin to light bulbs -- were used as electric switches, and it was inconceivable to make them smaller than about a cubic centimeter. This limit was obviously overcome -- by far -- but only because the domain of electronics shifted away from vacuum and gas-phase physics to solid-state physics, a classic case of redomaining.

The key point here is this: the limits of a technology are always relative to the specific knowledge domain that is linked to that technology. As that knowledge domain shifts, the limits can shift with it. Whenever this happens in a major way, many observers are stunned that a limit once considered immutable has suddenly been overcome. But it’s much less mysterious if you keep the above in mind.

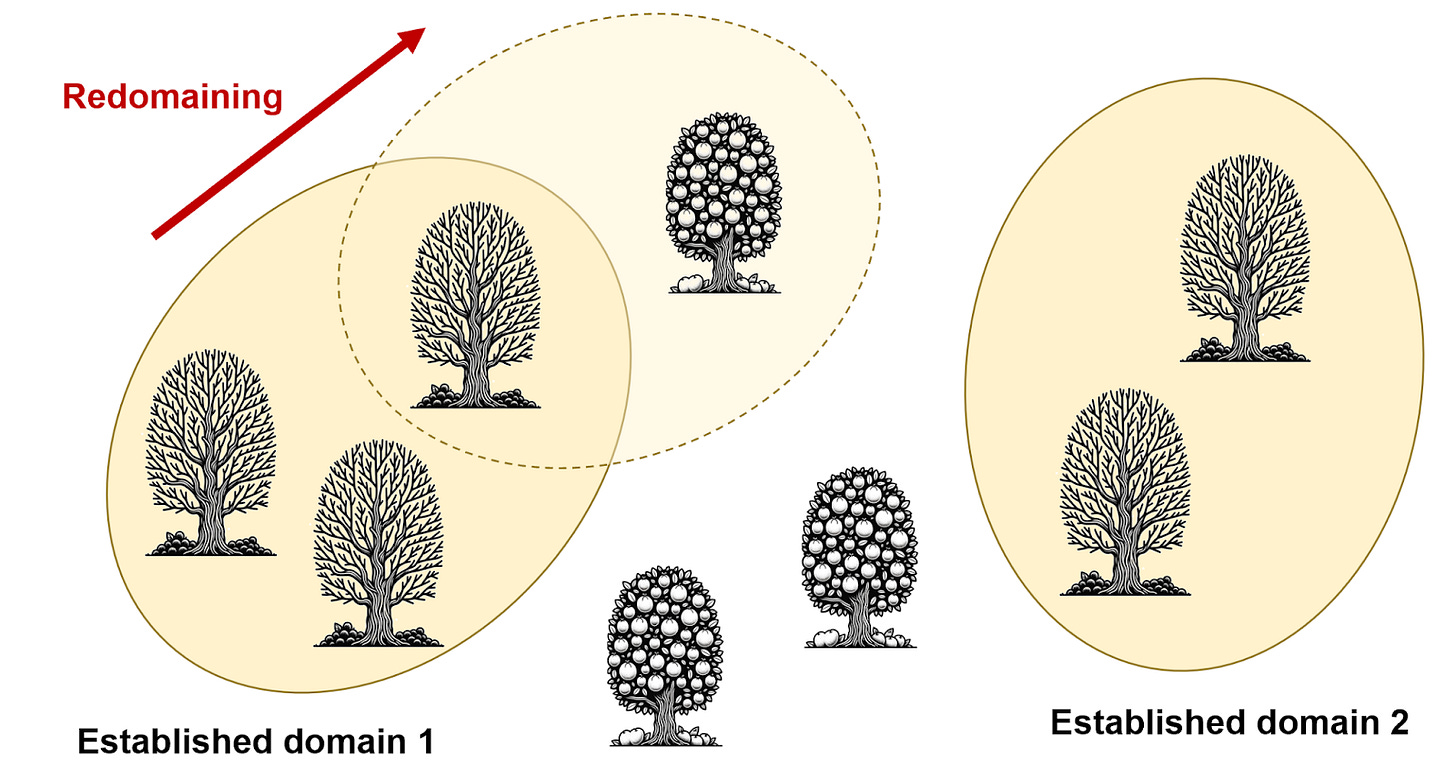

Recognizing the importance of redomaining also helps explain other issues. For instance, economists and sociologists have found that scientific output has been stagnating. Some have attributed this stagnation to scientists running out of new ideas, having picked all the low-hanging fruit. This may be true when considering only knowledge domains in their present, static form. But the notion doesn’t hold up once we allow for redomaining to occur.

Picture an orchard divided by multiple fences, where the trees within each fenced area have been picked clean but lush trees stand in the spaces between them. The remedy is then to shift a fence so that it encloses some of the lush trees that had remained untouched. Redomaining, as a shift in the framing of different areas of science, can lead to such shifts in knowledge space. New things become possible, and new tiers of low-hanging fruit become accessible.

All this raises the question: If redomaining bears the potential for changing what’s possible, can we have more of it please?

The unsatisfying answer is that redomaining seems to be becoming more and more difficult. As Michael Nielsen and Kanjun Qiu point out in a recent essay, there is much resistance to the formation of new fields. The same applies to adjustments of field boundaries.

What gets in the way? First of all, there’s a lack of awareness that redomaining is important, and few explicit efforts exist to promote it. Even worse, there are hurdles to redomaining that are intrinsic to the present organization of science.

The organization of education and research is built around existing knowledge domains, and the social and professional identities of researchers become intertwined with them. As Kuhn states, what occurs over time is “an immense restriction of the scientist’s vision and a considerable resistance to paradigm change. The science has become increasingly rigid.” Incumbent paradigms become deeply tied to major scientific institutions: universities, professional organizations, and funding agencies support existing paradigms and may be reluctant to support research that challenges them. Such organizations and individuals may then develop vested interests (commercial, ideological, political) in the status quo.

Here we need to recognize that interests of scientists and of society don’t always align. Society stands to benefit from efforts to explore different kinds of redomaining potential; but scientists -- especially senior and highly established ones -- often stand to lose.

Sometimes hurdles to redomaining arise inadvertently. Take, for instance, the case of the senior physicist Max Tegmark, who argued that so-called coherent quantum effects are too short-lived to meaningfully affect brain function (which has become known as the argument that living organisms are “too warm, wet, and noisy” for coherent quantum effects). While his remarks were perhaps well-intended, for many years they represented an insurmountable obstacle for younger researchers wanting to work on related topics. Reviewers of papers and funding proposals would simply cite Tegmark’s position as a reason to dismiss the whole approach. By now, however, careful experimental work has shown that nature has indeed evolved mechanisms to stabilize coherent quantum effects in warm, wet, and noisy environments. A recent paper even demonstrated experimentally that such effects can occur in protein structures found in the brain.

All this means that to promote redomaining we need innovation policy institutions that are relatively insulated from academic communities, with their internal incentive structures and deference to seniority. It is of course very understandable that senior scientists are concerned with the career advancement of their former students -- just don’t put them in charge of expert committees and then be surprised if no fundamentally new ideas emerge from the programs they propose. The innovation policy community needs to recognize that it can’t simply off-load the governance of innovation to scientists who are themselves deeply dependent on social approval from their peers -- and still expect optimal innovation outcomes.

Don't get me wrong: a lot of the research that scientific communities organize under their own governance is great work. But it tends to be highly incremental -- what Thomas Kuhn called “normal science.” Normal science is science within existing domain structures, science that can be considered domain-reinforcing. But if we want more surprising transformations on the order of the transistor, then we need to reserve a place in our science funding portfolios for research that is potentially domain-transcending.

A model for how redomaining could be more explicitly promoted is the research funding model associated with the innovation agency DARPA and its various offshoots, which include ARPA-E. The idea here is to create nimble exploratory research programs even if -- or especially if -- their approaches go against the grain. De facto, such programs represent protected spaces in which infant ideas can be raised to greater maturity before they enter the ring (or shall we say the cage?) with well-established ideas.

To give you a better sense of what that means in practice, let us dig a bit deeper into our ARPA-E program on low-energy nuclear reactions. When physicists first began modeling nuclear reactions in the 1930s, it quickly became commonplace to consider only two atomic nuclei at a time and to ignore any other nuclei in their surroundings. The primary reason for this simplification was that it made calculations easier, and it worked well enough for describing the kinds of nuclear reactions that are most readily observed in nature (e.g. fusion of hydrogen nuclei in the sun and fission of uranium nuclei in places like Oklo).

However, in human labs, we can arrange atomic nuclei in ways where more than two of them interact significantly, which requires a different, more complicated set of modeling approaches (see the comparison in the image below: the two-nuclei fusion rate expression is at the top; a more complicated many-nuclei fusion rate is at the bottom; more details in this paper). The models employed here are known as “many-body models,” and they are much less common in nuclear engineering than in other fields of physics such as quantum optics. So our work implies a proposal for redomaining: to tie certain quantum engineering principles much more closely to nuclear physics than has been done to date.

What’s exciting is that such quantum engineering principles -- and the more complicated models that describe their impact on nuclear reactions -- promise much greater control over nuclei than previously thought possible. Coming back to the transistor, amazing control was achieved over electrons in the course of the 20th century. Every smartphone is testimony to their precise and reliable orchestration. The goal we pursue now is to achieve similar levels of control over nuclei, which would allow us not only to accelerate the decay of unwanted radioactive materials but also to selectively enhance desirable nuclear reactions, such as clean hydrogen fusion reactions that do not produce radioactive byproducts. The ultimate result would be a complete decoupling of nuclear energy and radioactivity. Again, this is an aspiration that has conventionally been considered impossible. But that is precisely why we are excited to work on it.

In the greater scheme of things, research efforts that challenge notions of what’s considered possible are still extremely rare. Is that because there are not enough ideas for redomaining? Or because institutions are not set up to promote them?

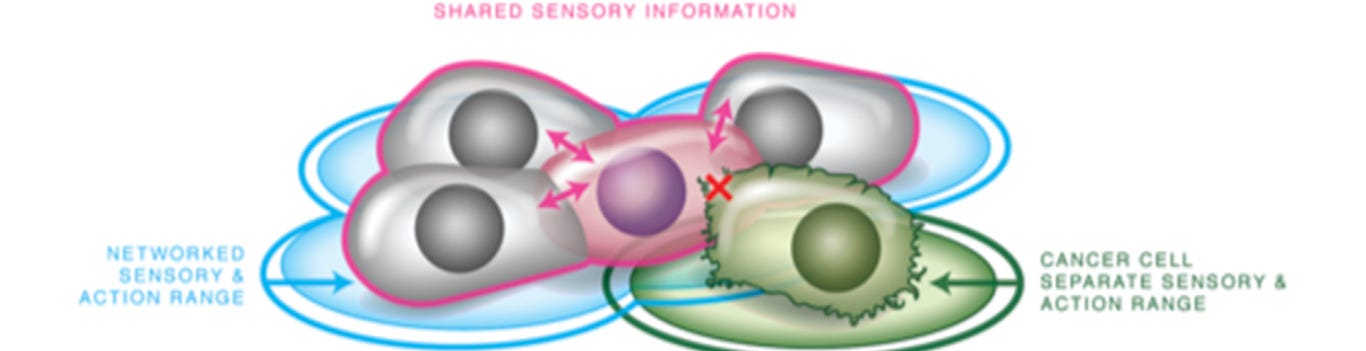

As a matter of fact, there is no shortage of redomaining proposals. Take, for instance, Michael Levin, who proposes giving electricity a greater role in biology, including as a way to prevent cancer growth. Or Stuart Hameroff, whose quantum approach to the brain suggests new techniques for treating depression and dementia.

Mind you, this doesn’t mean that everyone and their mother’s redomaining proposals need to be taken seriously. But there’s no lack of proposals from experienced, well-trained scientists. The proponents in the examples above are all tenured university professors -- and still they have faced massive pushback from peers when putting forth their redomaining proposals.

Here, the oversupply of PhDs may also help us. It creates a larger pool of extremely well-trained scientists who are fairly independent of academic power structures and who, with less at stake, may feel freer to contribute redomaining proposals.

Almost needless to say, not every proposal for redomaining will pan out and result in better technologies. Many may end up being dead ends to be abandoned in due time. However, if we don’t start to promote redomaining more aggressively, we may forever be stuck with our current limitations and never know what is truly possible.